Featured Projects

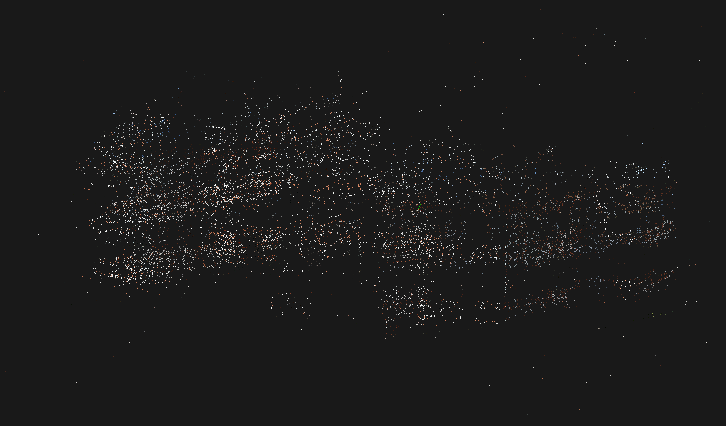

Structure from Motion

Developed a basic structure-from-motion framework. OpenCV was used for pairwise motion estimation and triangulation, and Ceres was used for global bundle adjustment. The framework generates a sparse point cloud representing the scene geometry.

code
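
As a rough illustration of what the bundle-adjustment step minimizes, the sketch below computes the reprojection residual for a single observation under a simple pinhole model. The function names, the single focal-length parameter, and the principal point at the origin are assumptions made for illustration; this is not the project's Ceres cost function.

```python
def project(point, rotation, translation, focal):
    """Project a 3D world point into a pinhole camera (principal point at 0, 0)."""
    # Transform into the camera frame: X_cam = R * X + t
    cam = [
        sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    # Perspective division followed by scaling with the focal length
    return (focal * cam[0] / cam[2], focal * cam[1] / cam[2])


def reprojection_residual(point, rotation, translation, focal, observed):
    """Predicted minus observed pixel; bundle adjustment sums the squares of these."""
    u, v = project(point, rotation, translation, focal)
    return (u - observed[0], v - observed[1])


# Hypothetical observation: identity pose, so the point is already in camera coordinates.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
residual = reprojection_residual(
    point=(1.0, 2.0, 10.0),
    rotation=identity,
    translation=(0.0, 0.0, 0.0),
    focal=500.0,
    observed=(50.0, 100.0),
)
```

A global bundle adjustment would stack one such residual per observation and let a nonlinear least-squares solver adjust all camera poses and 3D points together.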

High Dynamic Range using CRF reconstruction

Developed an HDR image generator that uses a set of SDR images captured at different shutter speeds. Essentially, the Camera Response Function (CRF), which maps pixel values to exposure values, is recovered. Using the CRF, the radiance values are tone-mapped to generate an HDR image. The algorithm is based on Debevec et al., 1997.

code report
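
Once the CRF is known, the per-pixel merge across exposures can be sketched as follows. This is a minimal sketch assuming 8-bit pixel values and a triangle ("hat") weighting of the kind described in the Debevec paper; the response curve `g` used here is a made-up linear sensor, not one recovered by the project, and all names are hypothetical.

```python
import math


def hat_weight(z, z_min=0, z_max=255):
    """Triangle weighting that trusts mid-range pixels more than near-saturated ones."""
    mid = 0.5 * (z_min + z_max)
    return z - z_min if z <= mid else z_max - z


def log_radiance(pixel_values, exposure_times, g):
    """Weighted average of g(Z) - ln(dt) over all exposures of one pixel."""
    num = 0.0
    den = 0.0
    for z, dt in zip(pixel_values, exposure_times):
        w = hat_weight(z)
        num += w * (g[z] - math.log(dt))
        den += w
    if den == 0:
        raise ValueError("pixel is saturated or black in every exposure")
    return num / den


# Hypothetical response curve: pixel value proportional to exposure, so g(z) = ln(z).
# g[0] is a placeholder, since hat_weight(0) is 0 and that entry never contributes.
g = [0.0] + [math.log(z) for z in range(1, 256)]

# One pixel seen at three shutter speeds; the underlying radiance is 100 units per second.
times = [0.5, 1.0, 2.0]
values = [50, 100, 200]
radiance = math.exp(log_radiance(values, times, g))
```

With this synthetic curve every exposure agrees on the same radiance, so the weighted average recovers it; with a real camera the weighting decides which exposures to trust for each pixel.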

Autonomous driving using Deep Reinforcement Learning

Used a canonical actor-critic method, Deep Deterministic Policy Gradients (DDPG), to train an agent to follow lanes. The training was done in a simulation environment using the Carla Simulator. The work was primarily motivated by Kendall et al. Major changes were made to shape the reward signal. An attempt was also made to account for the partial observability of the environment by using recurrent neural networks.

code report
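
Two ingredients of DDPG that are independent of the driving task are the bootstrapped critic target and the slow-moving target networks. The sketch below shows both on plain Python lists; the parameter values and function names are illustrative assumptions, and the project's reward shaping and recurrent networks are not represented.

```python
def soft_update(target_params, online_params, tau=0.001):
    """Move each target parameter a small step toward its online counterpart."""
    return [tau * w + (1.0 - tau) * wt for wt, w in zip(target_params, online_params)]


def td_target(reward, next_q, done, gamma=0.99):
    """Critic regression target; next_q is the target critic evaluated at the target actor's action."""
    return reward + (0.0 if done else gamma * next_q)


# Hypothetical two-parameter "network" and a single transition.
target = soft_update([0.0, 1.0], [1.0, 1.0], tau=0.1)
y = td_target(reward=1.0, next_q=2.0, done=False, gamma=0.5)
y_terminal = td_target(reward=1.0, next_q=2.0, done=True, gamma=0.5)
```

A small `tau` keeps the regression target nearly stationary between updates, which is the main reason DDPG maintains separate target networks.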

Dronet Activation Maps

Added support for visualizing the decisions made by the Dronet neural network by publishing activation maps. The activation maps highlight the regions of an image that contribute most to the decision made by the neural network. The approach is based on Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. The goal of the project was to determine how well Dronet generalises to a ground vehicle. The experiments were performed on a Polaris GEM e6.

code
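
The core Grad-CAM computation is small enough to sketch without a deep learning framework. The example below assumes the feature maps of one convolutional layer and the gradients of the network output with respect to them have already been extracted (how that is done for Dronet is not shown); the function and variable names are hypothetical.

```python
def grad_cam(activations, gradients):
    """activations, gradients: lists of K feature maps, each an H x W nested list."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of the gradient over all spatial positions
    weights = [
        sum(sum(row) for row in grad) / (height * width) for grad in gradients
    ]
    # Weighted sum of the feature maps, then ReLU to keep positive evidence only
    return [
        [
            max(0.0, sum(w * act[i][j] for w, act in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]


# Two toy 2x2 feature maps: the first supports the output, the second opposes it.
acts = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 3.0], [1.0, 0.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
cam = grad_cam(acts, grads)
```

In practice the resulting map is upsampled to the input resolution and overlaid on the camera image.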

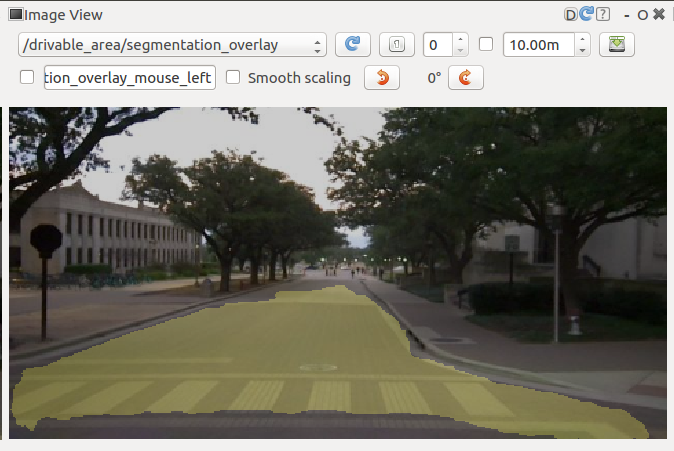

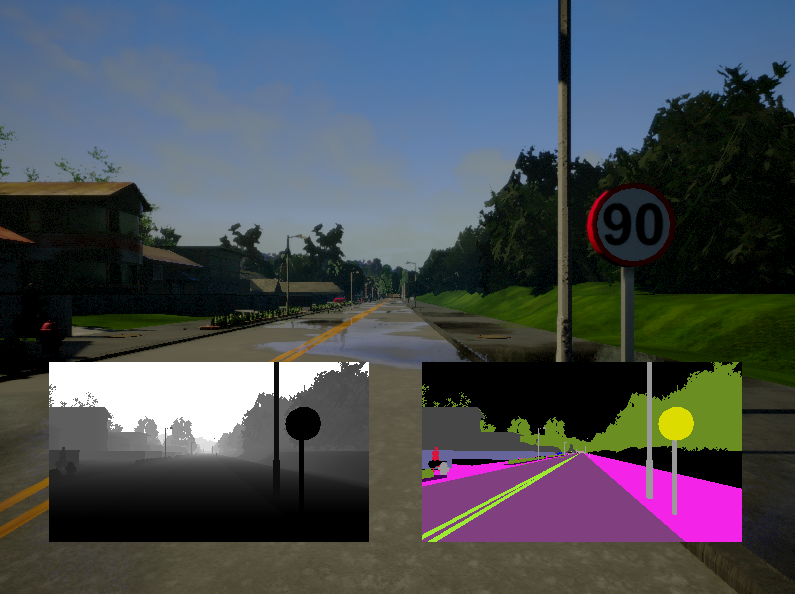

Drivable Area (Ongoing)

The aim of this project is to derive a driving policy directly from visual space. Currently, a segmentation network has been trained on a subset of the BDD100K dataset. The segmentation network uses a SqueezeNet backbone.

code

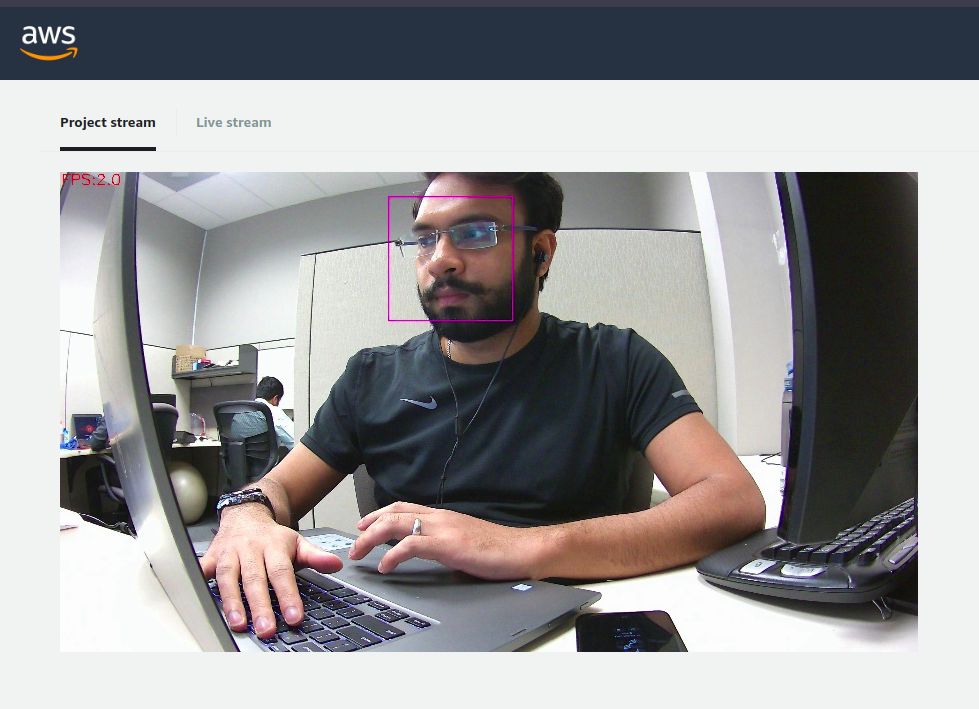

Face-Recognition Module

“Face Trigger” (FT) is a framework for building applications that rely on face recognition. It enables the development of an end-to-end pipeline for training a machine learning classifier to identify people’s faces. The model is based on a deep neural network inspired by FaceNet. The project was implemented as a Python package that can be used to develop other applications. As a proof of concept, a real-time face recognition application was developed on AWS DeepLens.

code documentation app

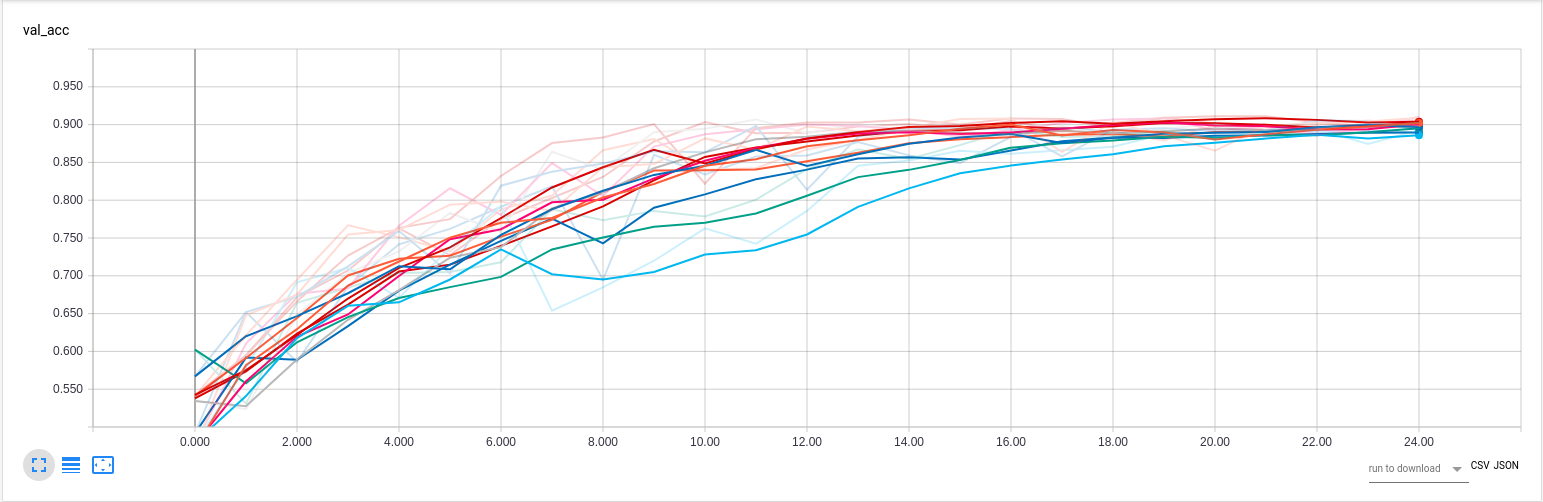

Human Activity Recognition

The aim of the project was to develop a machine learning model to recognise human activities: stand, walk, walk upstairs, walk downstairs, sit and run. Several models were developed, including classical methods such as SVM and neural network architectures such as CNN, LSTM, CNN-LSTM and ConvLSTM. The dataset used was the UCI HAR dataset. The trained model was deployed in an Android application.

code

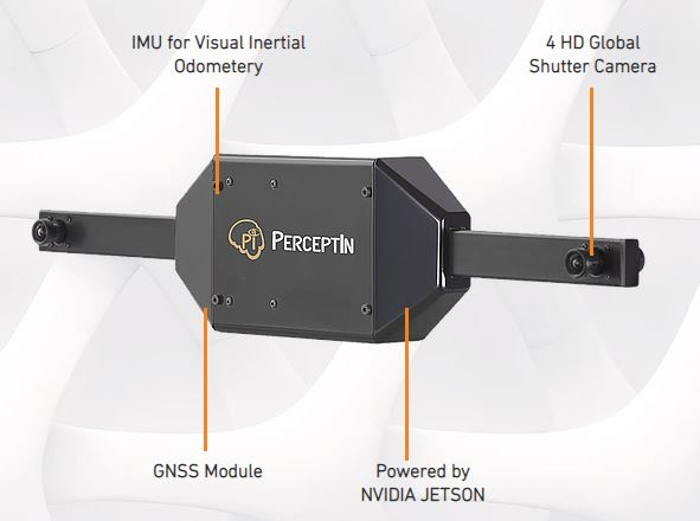

Dragonfly ROS node

Created a ROS node for streaming sensor data from Perceptin's Dragonfly computer vision module. The Dragonfly module has 2 stereo pairs (front + back) and an IMU, all of which are hardware synchronized.

code

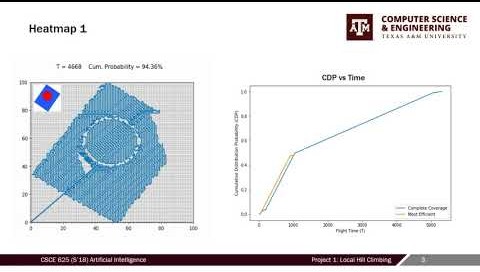

UAV Path Planning

The aim of the project was to generate a path for a UAV to traverse in order to find a missing person. The input was a probability density map giving the probability of finding the missing person in each grid location. The path was generated such that the UAV prioritises locations with a high probability density. A meta-heuristic algorithm, Local Hill Climbing, was used to generate the path.

code video report
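
One simple reading of local hill climbing on a probability grid is a greedy walk that always moves to the most promising unvisited neighbour. The sketch below illustrates that idea only; the 4-neighbour moves, the stopping rule, and the toy map are assumptions, and the project's actual variant may differ.

```python
def hill_climb_path(prob_map, start, steps):
    """Greedy local search: repeatedly step to the best unvisited 4-neighbour."""
    rows, cols = len(prob_map), len(prob_map[0])
    path = [start]
    visited = {start}
    r, c = start
    for _ in range(steps):
        neighbours = [
            (r + dr, c + dc)
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1))
            if 0 <= r + dr < rows
            and 0 <= c + dc < cols
            and (r + dr, c + dc) not in visited
        ]
        if not neighbours:
            break  # stuck: every neighbour has already been searched
        r, c = max(neighbours, key=lambda cell: prob_map[cell[0]][cell[1]])
        visited.add((r, c))
        path.append((r, c))
    return path


# Hypothetical 3x3 probability map (entries sum to 1).
prob_map = [
    [0.05, 0.08, 0.05],
    [0.10, 0.30, 0.20],
    [0.05, 0.10, 0.07],
]
path = hill_climb_path(prob_map, start=(0, 0), steps=3)
covered = sum(prob_map[r][c] for r, c in path)
```

Because each step only looks at immediate neighbours, the walk can get trapped in a locally good region, which is the usual trade-off of hill climbing against more global search.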

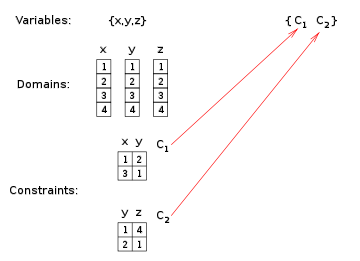

UAV Mission Planning

The aim of the project was to assign missions to pilots while respecting certain eligibility criteria. Three lists were provided as input. The first list contained the pilots and the aerial vehicles each of them can fly. The second list contained the UAVs, the mission types each could support, and the quantity available of each UAV type. The third list contained the missions along with their types. The mission planner had to assign each pilot to a UAV and a corresponding mission such that all constraints were respected.

code
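
A constraint problem of this shape can be solved with plain backtracking. The sketch below is one possible formulation, not the project's planner: it assumes each mission needs exactly one pilot and one UAV, that a pilot flies at most one mission, and that the three input lists are represented by the hypothetical dictionaries and tuples shown.

```python
def plan(pilots, uavs, missions):
    """Assign each mission a (pilot, UAV type) pair via depth-first backtracking.

    pilots:   {pilot: set of UAV types the pilot can fly}
    uavs:     {uav_type: (set of supported mission types, quantity available)}
    missions: list of (mission_name, mission_type)
    """
    stock = {uav: qty for uav, (_, qty) in uavs.items()}
    free_pilots = set(pilots)
    assignment = {}

    def solve(i):
        if i == len(missions):
            return True
        name, mtype = missions[i]
        for pilot in sorted(free_pilots):
            for uav in sorted(pilots[pilot]):
                if uav in uavs and mtype in uavs[uav][0] and stock[uav] > 0:
                    # Tentatively commit this pilot and one UAV of this type
                    free_pilots.remove(pilot)
                    stock[uav] -= 1
                    assignment[name] = (pilot, uav)
                    if solve(i + 1):
                        return True
                    # Undo the choice and try the next option
                    del assignment[name]
                    stock[uav] += 1
                    free_pilots.add(pilot)
        return False

    return assignment if solve(0) else None


# Hypothetical inputs: only "ana" can fly the one UAV type that supports deliveries.
pilots = {"ana": {"quad", "fixed"}, "raj": {"quad"}}
uavs = {"quad": ({"survey"}, 1), "fixed": ({"survey", "delivery"}, 1)}
missions = [("m1", "survey"), ("m2", "delivery")]
result = plan(pilots, uavs, missions)
```

In this example the first choices tried (ana on the survey mission) leave nobody eligible for the delivery, so the search backtracks until raj takes the survey and ana takes the delivery.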

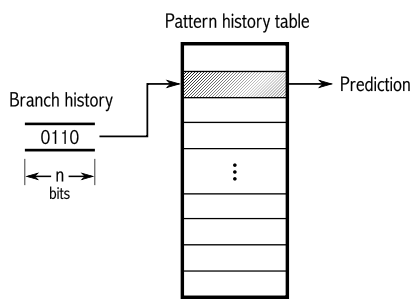

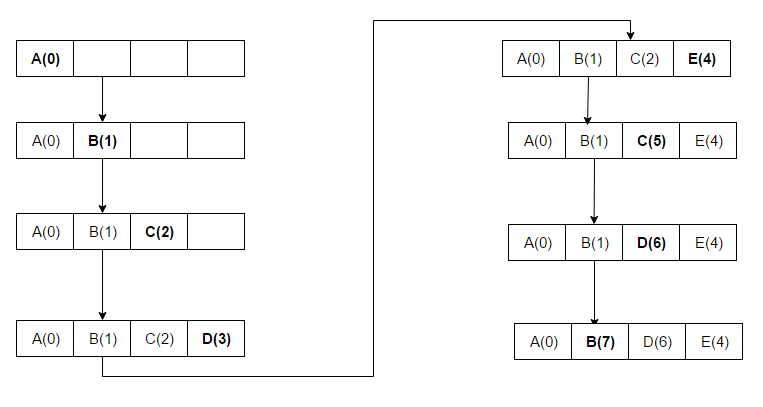

VPC Indirect Branch Predictor

Reproduced the Virtual Program Counter (VPC) indirect branch predictor. VPC treats each indirect branch as multiple virtual conditional branches, so the existing conditional branch prediction hardware can be reused. A conditional branch prediction algorithm was also reproduced, based on Merging Path and GShare Indexing Perceptron branch prediction.

code report
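
For readers unfamiliar with perceptron-based conditional prediction, the sketch below shows the basic scheme: a table of weight vectors indexed by the branch address, a dot product with the global history, and threshold-based training. It is deliberately the plain variant, not the merged path/gshare indexing reproduced in the project and not VPC itself; the table size, history length, and class name are assumptions.

```python
class PerceptronPredictor:
    """One weight vector per table entry; inputs are +1/-1 global history bits."""

    def __init__(self, entries=64, history_len=8):
        # Training threshold heuristic from the original perceptron predictor work
        self.theta = int(1.93 * history_len + 14)
        self.weights = [[0] * (history_len + 1) for _ in range(entries)]
        self.history = [-1] * history_len  # -1 = not taken, +1 = taken

    def _output(self, pc):
        w = self.weights[pc % len(self.weights)]
        # Bias weight plus dot product of the remaining weights with the history
        return w[0] + sum(wi * hi for wi, hi in zip(w[1:], self.history))

    def predict(self, pc):
        return self._output(pc) >= 0

    def update(self, pc, taken):
        y = self._output(pc)
        t = 1 if taken else -1
        # Train on a misprediction or while the output is not yet confident
        if (y >= 0) != taken or abs(y) <= self.theta:
            w = self.weights[pc % len(self.weights)]
            w[0] += t
            for i, hi in enumerate(self.history):
                w[i + 1] += t * hi
        # Shift the outcome into the global history
        self.history = self.history[1:] + [t]


# Hypothetical branch at address 0x40 that is always taken.
predictor = PerceptronPredictor()
for _ in range(20):
    predictor.update(pc=0x40, taken=True)
prediction = predictor.predict(0x40)
```

Under VPC, a predictor like this would be queried several times per indirect branch, once per virtual conditional branch, until one of them predicts taken.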

Cache Reuse Predictor

A cache reuse and bypass predictor for LLC using the perceptron learning algorithm. Based on Teran et al., MICRO 2016.

code report

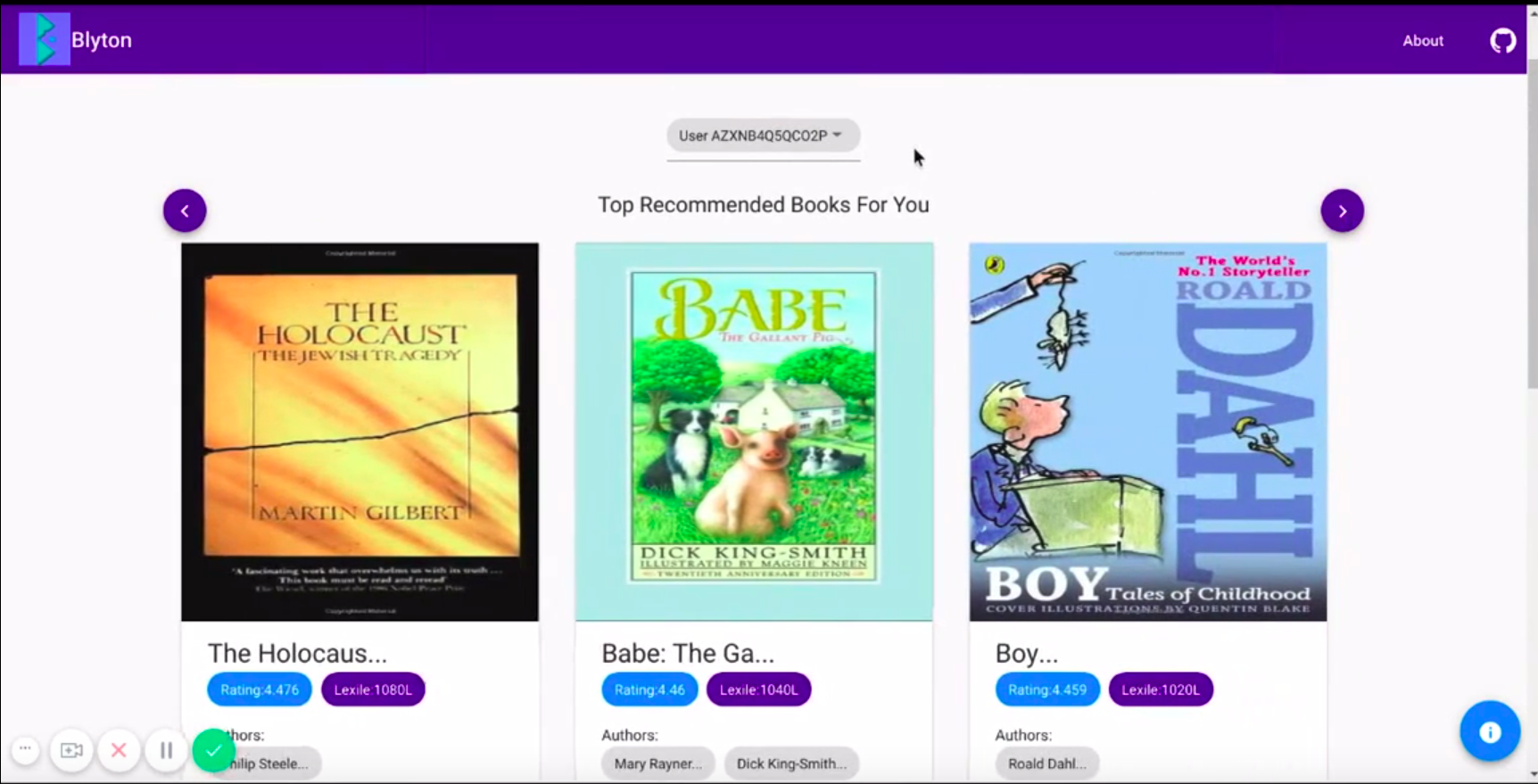

Blyton - Recommender System

Blyton is a book recommender system that generates recommendations for readers based on their guided reading levels.

code documentation video

Dronet on CoRL benchmark

The project aimed to benchmark Dronet's performance on Carla's CoRL 2017 benchmark suite. Dronet is a neural network that takes monocular images as input and provides 2 outputs: probability of collision and steering angle. Unfortunately, the pre-trained network did not perform well, as it was trained on real-life images. The network needs to be trained on synthetic images, or some form of domain adaptation needs to be applied.

code

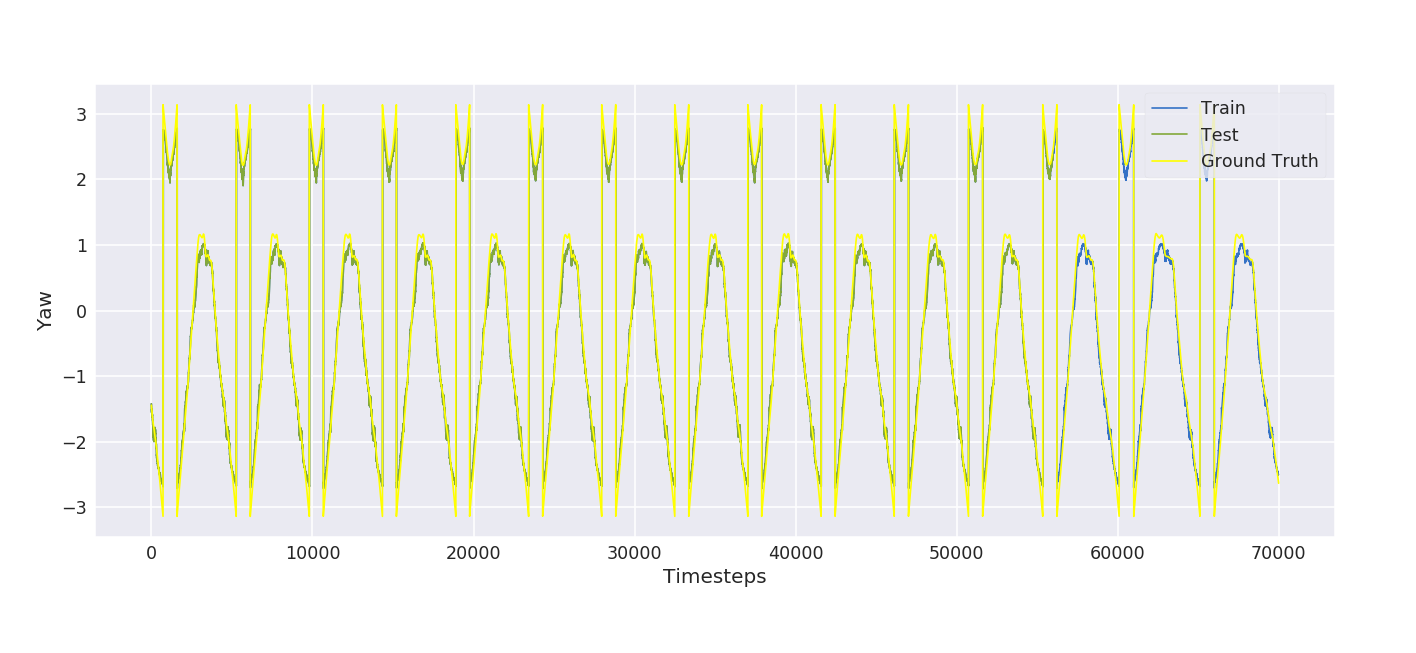

Yaw prediction from sensors

The project was to predict the yaw angles from raw sensor readings from an IMU. The aim of the project was to reverse engineer the Kalman filters used in expensive IMUs, so that a function could be learnt from sensor readings to orientation. The data was collected on a VectorNav IMU (VN-200) and a cheaper PixHawk IMU. The function approximator used was a recurrent neural network.

code

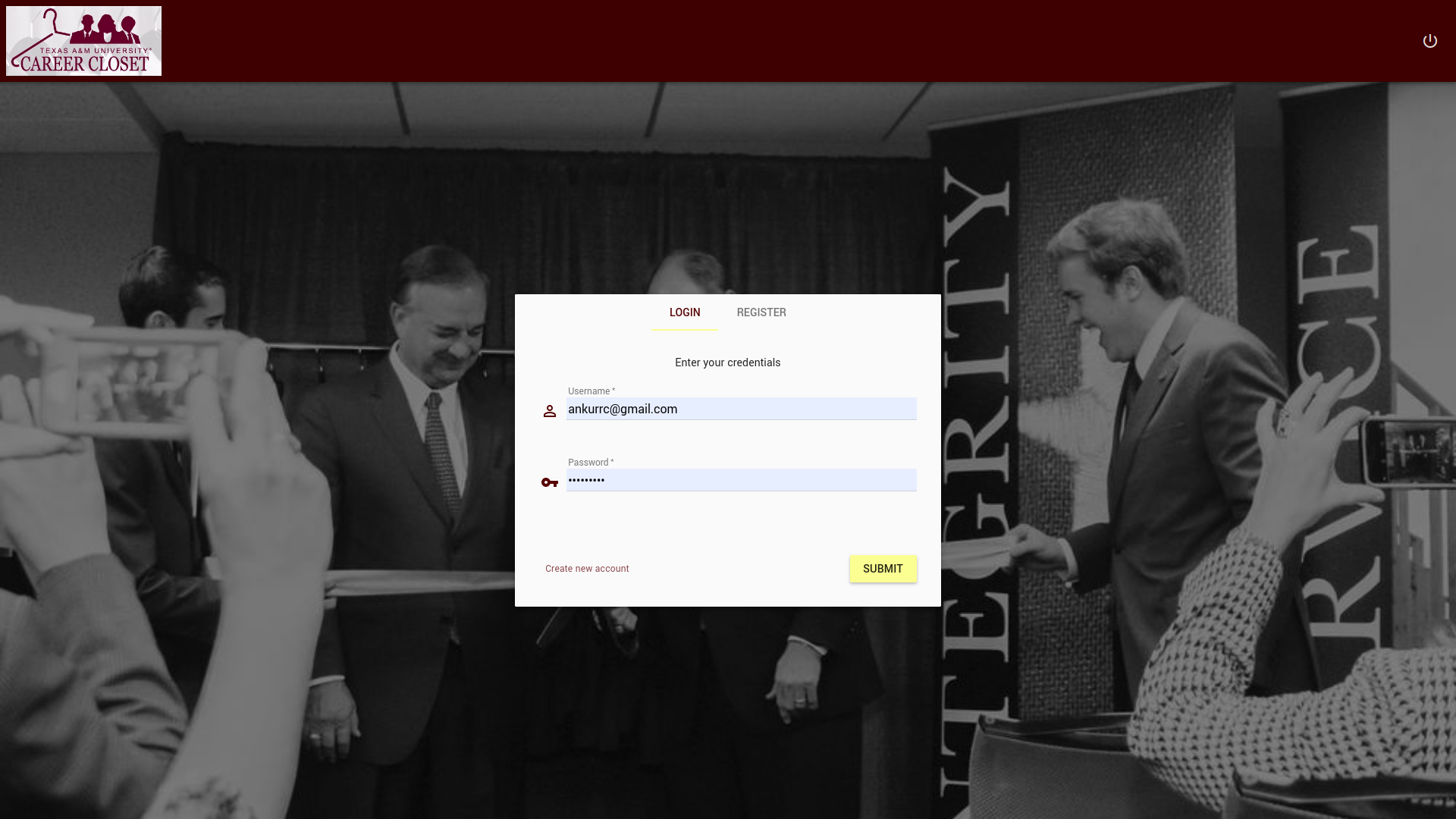

Career Closet - Webapp

TAMU Career Closet is an inventory management system built on Ruby on Rails and Angular 2. It helps administrators at TAMU Career Closet manage their inventory. For accessing the demo please enter the following credentials:

- Username: ankurrc@gmail.com

- Password: ankur@123